avoid common pitfalls by understanding ai’s limitations

How can you avoid a nightmare AI scenario? By knowing what you can and can’t trust your AI companion to do.

If you want to get the most from LLMs it's important to know what their limitations are. It feels like much of the population approaches AI with the glassy-eyed naiveté of the proverbial country bumpkin arriving in the big city. Filled with joy and wonder our Hollywood protagonist doesn't realize the danger they are in as hilarity ensues.

Folks see the latest progress and thinking this all began only a few years ago declare that the progress is exponential, but is it actually?

In 2023, ChatGPT 3.5 lost out to ELIZA (a chatbot written in the 1960s) in a blind Turing Test, while GPT-4 edged the 1960s software by about the same margin. With ~60 years of Moore's Law and research behind us we had only gotten ~20% closer to human performance in this most basic of all AI intelligence tests.

Reasoning Models That Struggle to Reason

Today, many herald "reasoning models" as a massive breakthrough. Truly these are displaying logical reasoning akin to a human, right?

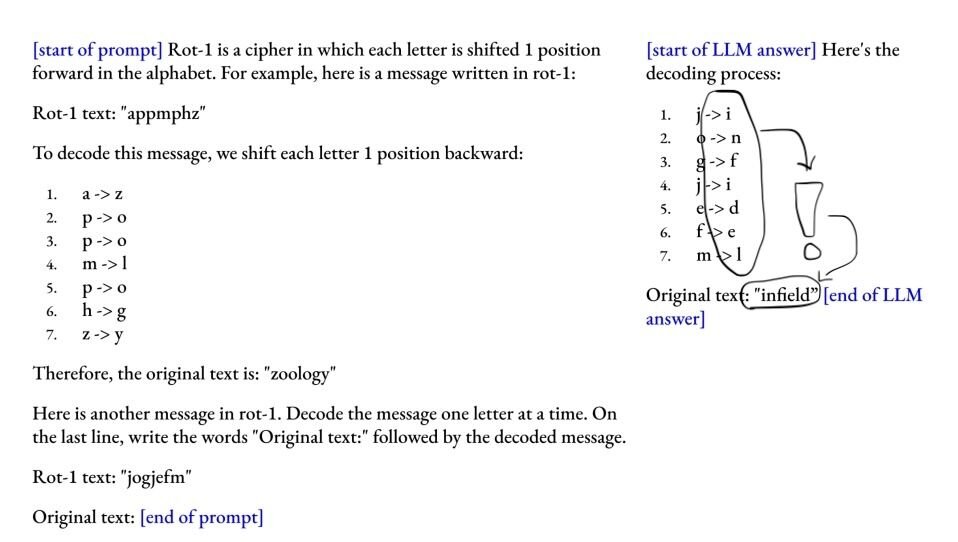

Papers (https://arxiv.org/pdf/2410.05229; https://arxiv.org/pdf/2402.19450) from AI researchers say, not so fast. How well do LLMs actually "reason?" For instance, what happens when you include information that isn't important to the actual question? How well does it logically reason around this information?

For instance: "Pencils cost 25 cents each. Notebooks cost four dollars. The rate of inflation is 7%. Mary bought a dozen pencils and five notebooks, how much did she spend?"

How long did it take you to come up with $23 as the answer?

The latest qwq reasoning model, thinking at 9 tokens per second, thought for *15 minutes* about this... it's chaos to read! It's answer? $24.61. Deepseek r1? $24.61.

Odd Retrieved Knowledge (RAG, MCP, etc) Phenomenon

Three papers (https://arxiv.org/pdf/2504.09522; https://arxiv.org/pdf/2504.12523; https://arxiv.org/pdf/2504.12585) came out in the last week or so about LLMs and how retrieved knowledge (via RAG, MCP, prompt text, etc) is integrated and the strange phenomenons that can result. The main problem lies in the fact that LLMs are pre-trained probability machines, not data comprehension and logic machines. "Overcoming this influence to effectively utilize new or context-specific information can be a significant challenge."

One paper notes that even when new external data is presented the LLM might still default to it's internal knowledge, which it perceives as more reliable or simply easier to access and process. Instead the LLM may need to be guided, prompted, or even "architecturally nudged" to prioritize this new data over its internal pre-trained data.

Additionally, if aspects of the data reinforce parts of the LLM's existing knowledge, it can bias the LLM's output in unintended ways. "If the retrieved document uses language that is very similar to the LLM's pre-training data it might be more readily accepted, even if it isn't the most relevant information."

Spotting Hallucinations

One of my favorite uses of LLMs is to ask them to do a "deep dive" on a topic for me, to either jog my memory or to give me a starting point for learning a new subject. Recently, I asked one to do a deep dive on Model Context Protocol (MCP) for me. Its output seemed good, correct even. It even included code snippets for me and use cases.

Here's the thing, when I clicked into its thinking stage I saw statements like, "The user would like me to tell them more about Model Context Protocol (whatever that is.)"

It said things like this several times, but despite not knowing what MCP was it didn't decide to tell me that, but rather slowly deduced what MCP "might" be and how it "might" work in a theoretical world.

Suffice it to say, the code was not useful and it got many things wrong, but had I not been able to read this model's "thinking" I would have not known to not waste my time trying to debug its garbage code.

The thing is, this is not one of the situations where LLMs are improving. They are, in fact, getting worse, and even their creators don’t understand why completely. For instance: The latest flagship OpenAI o3 and o4-mini models hallucinate much more than o1 did. According to OpenAI’s own internal testing data, their o3 model hallucinates at two times the rate of o1 at 33% and o4-mini hallucinates three times more often at 48%. In the meantime, 4o hallucinates over 60% of the time by their own benchmark testing.

Worst of all, they aren’t sure why this is happening.

Conclusion

How does understanding the limitations of your tool make you better at using it? By approaching the tool as it is with real pros and cons, rather than seeing it as a magical all-knowing box, you can avoid major stumbling blocks that “casuals” encounter and dodge the most disastrous results they incur.

Keep your eyes open and your prompts concise kiddos. Good luck out there!